Is there an ethics issue in computer science? (EPQ)

Posted on 2024-09-19 19:08:57

This EPQ explores the causes of modern-day ethical issues and how they relate to computer science, along with possible solutions to those issues. It was written in my last year of sixth-form school.

Abstract

This review looks at possible ethics issues in the computer science field. Recent news stories about governments spying on citizens, attempts to ban encryption, accusations of rigged elections and our growing reliance on technology made it seem important to understand why this is happening and what we are doing to keep people safe, not just through official legislation but also as citizens. It became apparent that the industry treats the effects on consumers as an afterthought to profit and control. Specialists responded that in many cases the industry is not externally regulated, and that it is in many people's and countries' best interests to turn a blind eye to its effects. This review includes research from newspapers, blogs and interviews with teachers, students, CEOs and people who work in the industry. It focuses primarily on the dangers and harm the industry is causing to the world, as well as why very little is being done to prevent this. This report does not contain any interviews or information directly from Big Tech (Alphabet (Google), Amazon, Apple, Meta, Microsoft, Baidu, Alibaba, Tencent and Xiaomi, which are among the largest information technology companies in America and China). This is because I received no responses from any corporate email addresses or from employees contacted via LinkedIn.

Introduction

I went into this EPQ to find out whether there is an issue with ethics in how computer science is taught and in how products are made for an online audience. I also wanted to narrow down what these issues are, why they still exist and what the possible solutions might be. My hypothesis is that there are many ethical issues and that their causes are money and ignorance. I wanted perspectives from across the industry, so I planned to speak to students, teachers and people working in the industry worldwide. Students and teachers are the people who will shape the industry in the future. People currently working in the industry can reveal the root causes of possible issues and explain why the industry is not trying to fix them. I also used online articles and online communities to expand on what people said. The world is heavily reliant on the internet, and yet many people don’t understand how it works. Not understanding how something works generally leads to people not questioning companies' actions. When a local store replaces people with self-service machines, we can see the effect of people losing their jobs, but when a social media company changes its algorithms or modifies a single line in a 400-page terms and conditions document, end users have no idea how that affects them. The end goal is a clear understanding of who is responsible for the issues facing the industry, why we allow these issues to continue and why world governments are not creating legislation to protect the general public online.

Companies' bottom line

In an interview with Claus Jensby Madsen is a Danish Trade School Computer Systems programming teacher with a master of science, bachelor’s degree and master’s degree of science specialising in coding theory and 32 years of coding experience. He describes how companies operate for profit: “Yes, I don’t think it necessarily is an issue specific to the IT industry, as I see it more as a weakness of capitalism. As well as gives an example of being environmentally friendly: “Companies are generally only environmentally friendly (or pretend to be environmentally friendly) if such an image is beneficial to the company’s goals.” Protecting people’s privacy or looking out for their mental health directly harms their bottom line.

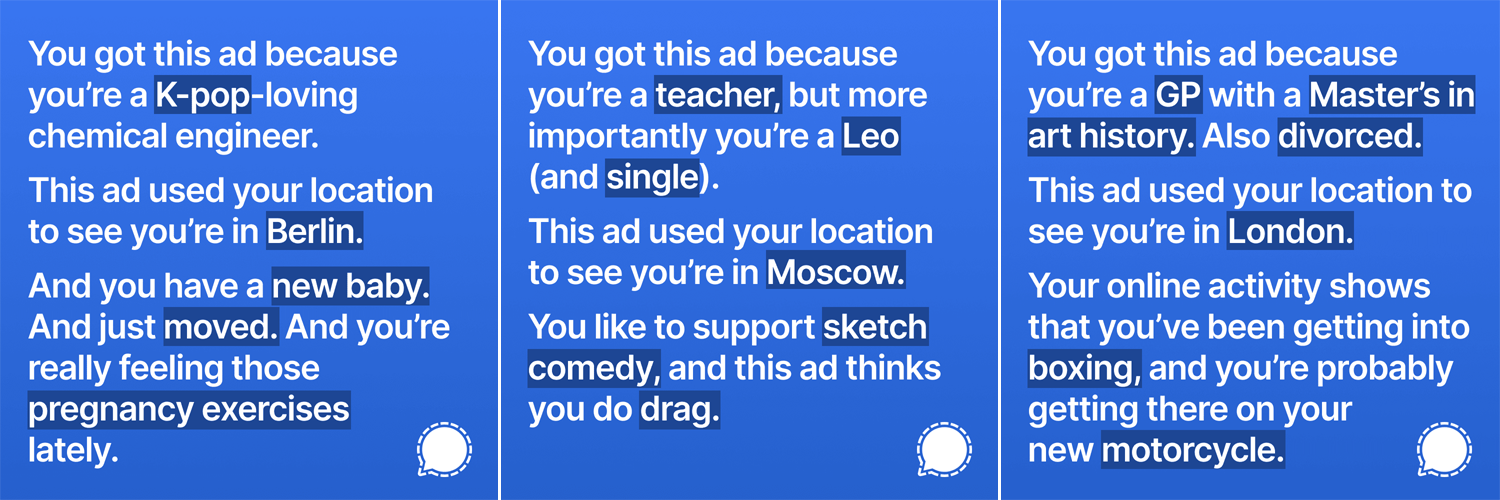

When maximising profits is the only concern for a company.” A company’s main goal is profit and keeping the board happy. Companies do this in many ways one of these methods is a dark pattern. Madsen states “A dark pattern is designed to trick the user into doing something against their own interest. Examples of this are making it hard to distinguish advertisements on search results, hiding additional fees until the end of a purchase process, making it more cumbersome to reject cookies than accept them, etc.” therefore Social media companies use dark patterns to get people to agree to hand over more data. Instagram asks users if they want a more personalised or less personalised feed. This clever wording makes it unclear that you agree to more data being collected, which leads to it being more personalised. Google “Data Sandbox” product that allows them to collect data from any site that you visit on Chrome pop-up read “Got It” and “Settings”. Got it casual wording that makes it seem like people understand the new feature but do not agree to enable it. The way to opt out is to press settings, find the feature where the names have not been said, and disable it. Signal a source messaging app, brought ad spaces from Meta that reflect the kinda of information that’s sold when you agree to dark patterns

The data you agree to hand over includes political beliefs, predicted job, relationship type, predicted income, sexual orientation and your address. This is information most people wouldn’t feel comfortable telling others, let alone knowing that it is being sold.

This evidence shows that companies are willing to ignore ethical advertising guidelines by tricking users into providing more data that they can then sell to increase their profit.

“The obsession of almost every tech company has been scaling usage and deepening engagement on their platform.” - Joe Seddon, Founder & CEO at Zero Gravity, Forbes 30 Under 30 and holder of a Bachelor of Arts degree from Oxford. Social media companies need engagement to make money, so a social media company's goal is to change people's behaviour to fit its needs. “Tech companies have become incredible vehicles for growing value for shareholders, but the knock-on effects of that have been negative externalities on society.”

Activity and profit are directly correlated. The best way to guarantee someone keeps returning is to build an addiction: make someone crave dopamine, then supply the solution. “The success of an app is often measured by the extent to which it introduces a new habit,” said app developer Peter Mezyk. Social media provides three main things: instant gratification, distraction from reality and a fear of missing out.

Train addiction

When someone takes a photo on Instagram, the goal is to post it. Preferably, Instagram wants you to use its camera, not the camera app on your phone. Instagram uses filters and editing to instantly make your image look better or to change it to evoke emotion. This releases endorphins, creating positive reinforcement.

Whether it’s a long class or a bad day at work, people look for ways to distract themselves from life. This takes the form of TV, drinking, books, video games and now social media. The danger of social media is that it’s always on you, sending you little notifications throughout the day. These notifications plant the idea in your head and become a distraction from work. At some point, you open the app and spend half an hour scrolling through cat videos. This provides positive reinforcement while the app is open, but people find it harder and harder to spend time without social media.

Fear of missing out (FoMO) is a term coined in 2004 to discuss social media’s effects. “FoMO includes two processes; firstly, perception of missing out, followed up with a compulsive behaviour to maintain these social connections.” (Gupta and Sharma, 2021) People require interaction with others; we are herd animals, and part of this is a fear of being forgotten, disliked and unwanted. Your brain interprets this as a threat, creating anxiety and stress. Developers know about these effects of social media but choose to modify their apps to play on this fear for money.

While it is legal for a company to produce addictive products, physical products like tobacco are strictly regulated. Social media can lead to the same kinds of harm as physical products; as Senator Lindsey Graham said to Mark Zuckerberg, “You have a product that’s killing people.” Selling a product to teens and children when CEOs know, and admit, that it leads to harm may be legal, but it is clearly unethical.

The change in politics

“In retrospect, it was always obvious in some sense these platforms would completely disrupt the way people communicate and have a knock-on effect on the political system.” -Seddon. Targeted ads have become a massive part of politics. Cambridge Analytica was a British political consulting firm that collected the personal data of millions of people without their consent. This data was then used to publish highly targeted ads for political campaigns. The 2016 Donald Trump campaign used adverts targeted at specific people whose data had been illegally harvested through sites like Facebook. Also in 2016, the UK Independence Party used Cambridge Analytica to publish highly targeted adverts to convince voters to leave the EU. It has never been easier for a government or politician to find out everything they need to convince you of something. The harder part is collecting the data legally.

In 2022, only 8% of US adults said they get no news from social media (Pew Research Center, 2022). This means 92% of adults receive at least some news through social media. News directly affects the way we perceive politics. 16% of Americans have admitted to sharing news that they later found out was fake (Barthel, Mitchell and Holcomb, 2016). Fake news is present online and can directly affect the way people think, believe and vote. 78% of Americans who relied on international news received at least one COVID shot, while only 57% of Americans who relied on what Donald Trump said received at least one (Jurkowitz and Mitchell, n.d.). Donald Trump spoke without being fact-checked, and older Americans in particular took what he said at face value, believing COVID was not real. 39% of Americans are confident in their ability to identify fake news. A quarter of Americans have said they have shared fake political news. Social media has become a way to get a large number of unsuspecting people to believe you.

In the past, it took a lot of time and money to spread propaganda; now the Internet will spread it for you. Cambridge Analytica broke the FTC Act through the deceptive conduct alleged in the complaint against it, as well as EU data and privacy safeguarding laws, for political gain. Politics online leads to unethical, immoral and illegal breaches of people's privacy in order to push microtargeted advertising.

Accidental Consequences

“When you're trying to build something from scratch and you don’t have a preexisting user base, and you don’t have a proof of concept, often your concern is getting people.” “Ethics is considered when it’s too late.” -Seddon. Small tech startups get caught up in the excitement of improving growth, then getting funding, then improving engagement, and by the time they slow down, there is a board that must be kept happy and employees to pay. The ethics of what they were doing never crossed their minds until the decisions and the business model could no longer be changed. When a company is starting out, there is a lot of talk about whether something is possible, not whether it should be done. This applies to hobbyists as well. “Most hobbyists do not intentionally try to overlook ethical aspects of CS. They do not think their actions have any negative consequences.” -- Abdulaziz A Ahmed, a computer science teacher in the UAE. Many hobbyists do things because they can, or to test their skills. One college student used AI to generate a fake blog that reached number one on Hacker News (MIT Technology Review, n.d.). The intention behind this was just curiosity. A lot of the early work on deepfakes was done by hobbyists and college students out of curiosity, not realising that their work would later be used by scammers, propaganda groups and other malicious people. While the hobbyists never intended or thought about these uses, they still wrote the original program, so are they responsible for the outcomes? Ethics is not ignored intentionally in hobbyist spaces, nor is it ignored intentionally by start-ups. When you have five users, a code of conduct doesn’t seem necessary, but it becomes a slippery slope as you start to grow and expand. By the time ethical guidelines are needed, it is normally too late.

“By far, the greatest danger of Artificial Intelligence is that people conclude too early that they understand it.” - Eliezer Yudkowsky

Miranda Mowbray, an Honorary Lecturer at the University of Bristol and a researcher at Hewlett Packard, and Dr Vahid Heydari Fami Tafeshi, a lecturer at Staffordshire University London, have spoken about the lack of transparency, especially with AI: “types of AI tools allow IT solutions to be produced easily without it being clear exactly how & why the solution works.” “and if a member of the public is adversely affected by one and wants an explanation of why, it may be difficult to provide an explanation.” -- Miranda Mowbray. When we use AI, we have to trust the developer and the developer’s decisions. We also have to trust the data that the AI was trained on. The Chartered Insurance Institute released a paper showing that the training data initially given to an AI led to gender bias. In the data the AIs were trained on, men tended to be in jobs related to logic or driving, and had fewer reported crashes. When these same AIs were then used to predict insurance rates, being a woman significantly increased the predicted values. Discriminating by gender is illegal in the UK, but because the rates were set by a machine with no feelings or emotions, the possibility of bias was not considered. Dr Vahid Heydari has talked about making sure we strip unneeded data before training AI models. If AI is used in medical research with data from real patients, we should remove their names and addresses, as these do not seem related to the goal; but things like gender and family medical history are also personal and identifying data, so should these be stripped before use as well?
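As a rough illustration of what stripping unneeded data before training could look like, here is a minimal Python sketch. The field names and the example record are invented for illustration and do not come from any real dataset or from the interviewees; it only shows the mechanical step, not how to decide which fields are safe to keep.

```python
from typing import Any

# Hypothetical field names; a real dataset would define its own schema.
DIRECT_IDENTIFIERS = {"name", "address"}
SENSITIVE_ATTRIBUTES = {"gender", "family_medical_history"}


def strip_fields(record: dict[str, Any], fields_to_drop: set[str]) -> dict[str, Any]:
    """Return a copy of the record without the listed fields."""
    return {key: value for key, value in record.items() if key not in fields_to_drop}


# An invented example record, not real patient data.
patient = {
    "name": "Example Patient",
    "address": "1 Example Street",
    "gender": "F",
    "family_medical_history": ["asthma"],
    "blood_pressure": 120,
}

# Removing direct identifiers is the uncontroversial step.
without_identifiers = strip_fields(patient, DIRECT_IDENTIFIERS)

# Removing sensitive attributes as well is the judgment call raised above.
minimal = strip_fields(without_identifiers, SENSITIVE_ATTRIBUTES)
```

Dropping a column does not guarantee that a model cannot infer it from the remaining fields, so a step like this is a starting point rather than proof that a system is unbiased.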

“AI is obviously not transparent to non-coders and can have subtle bugs and security loopholes. I think the quality of code on the Internet is likely to go down in the short term” --Miranda Mowbray. People look at AI as a machine that is always right. AI can only use what it has been taught, and there is a lot of wrong and outdated information on the Internet. When an AI is being trained, it can't filter this out, so it assumes that all the information is correct. AI is great at small, simple tasks, but when a task becomes too large it relies on incorrect knowledge and guesses that people take as facts. Security is an important consideration when developing a website. New exploits and problems are discovered daily, but an AI that stopped learning in 2019 would have no knowledge of them, and a person who is not a developer will not know about them or have the skills to fix them. The result is an Internet with more security issues.

“‘a decision based solely on automated processing’ that ‘produces legal effects . . . or similarly significantly affects him or her’, the GDPR creates rights to ‘meaningful information about the logic involved’.” (Selbst and Powles, 2017) Under the EU’s GDPR, any use of AI or ML that directly impacts a person must be explainable to that person, giving them a “right to explanation” of how they will be affected and of the process that led to the decision. This will force AI users to consider more closely how they use AI and the effects of the training material given to AIs.

People think of AI as a solution to all ethical issues; after all, it’s a machine with no feelings, so how could it discriminate? In many cases, it’s the opposite. Giving an AI training material that fits the coder's biases and beliefs produces a system that is biased and does not act fairly, while it is also nearly impossible to prove that it is biased. Such a system hides discrimination, and this is evidence that ethical considerations are overlooked in the development and implementation of AI.

Regulations Introduction

Everyone I interviewed agreed that more regulation is needed; however, everyone also recognised the challenges behind it. The Internet is a global platform, so regulation would require a united international front. Companies can move headquarters to get around laws and fines. Big tech companies also have teams of lawyers to find ways around punishments, and in many cases in the USA, the people writing the laws also hold investments in the companies they regulate. New laws often only harm new players, when the people who need to be controlled are those with all the influence.

Using the USA as an example, regulators can be split into two groups. The first group is congressmen who repeatedly show us they don’t understand how technology works, as has been seen many times in the investigations of Facebook. The other group own Big Tech stock while in charge of regulating it. The New York Times found 97 members of Congress with conflicts, with Ro Khanna, a Representative from California, having 147 conflicts with investments (Parlapiano et al., 2022).

What are governments doing?

The EU is trying to crack down on Big Tech with massive fines under the DMA (Digital Markets Act), but Big Tech treats these fines as an operating cost. Apple was fined 8 million euros for illegally collecting data in the EU that was then used for advertising (Hunton Andrews Kurth LLP, 2023; Schiff, 2023). Apple makes 146,560,845.61 euros a day on average, so the fine was about 5.5% of one day's profit. As long as Big Tech companies can make more profit by breaking laws and then paying the fines, they will.
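The 5.5% figure can be checked with a quick calculation. The short Python sketch below uses only the two numbers quoted above; the function name is made up for illustration, and the daily figure is taken as stated in the text rather than verified independently.

```python
FINE_EUR = 8_000_000
DAILY_EARNINGS_EUR = 146_560_845.61  # daily figure as quoted in the text


def fine_as_percentage_of_day(fine: float, daily_earnings: float) -> float:
    """Express a fine as a percentage of one day's earnings."""
    return fine / daily_earnings * 100


share = fine_as_percentage_of_day(FINE_EUR, DAILY_EARNINGS_EUR)

# The same ratio expressed as hours of an average day.
hours_to_earn_fine = FINE_EUR / DAILY_EARNINGS_EUR * 24

print(f"The fine is {share:.1f}% of one day's earnings")
print(f"That is roughly {hours_to_earn_fine:.1f} hours of an average day")
```

On these numbers the fine is earned back in well under two hours, which is why it can be treated as a routine cost rather than a deterrent.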

While the EU is worrying about how data is being used, China, the UK and the USA are in an AI arms race. Through bursaries, they are trying to become the most powerful countries in military and technological terms.

“If the democratic side is not in the lead on the technology, and authoritarians get ahead, we put the whole of democracy and human rights at risk,” said Eileen Donahoe, a former U.S. ambassador to the U.N. Human Rights Council. AI is becoming more intertwined with politics all the time, being used for fact-checking, writing news and creating large-scale online propaganda. AI is also being integrated into traditional weapons, with the first successful flight of a fully AI-operated jet (NBC News, n.d.). Another example is Israel's use of ‘Smart Shooter’ AI turrets to disperse Palestinian protesters; while at the moment they fire rubber bullets, they have already caused loss of vision and other major injuries. The company making them refers to them as a ‘One Shot—One Hit’ system and is now looking to use AI to make an automated dome system (Kadam, 2022). These systems remove any respect and empathy for humans, creating more hostile environments. Israel has previously set up automated tear gas guns around refugee camps that fire without warning, with the official justification being de-escalation (Vigliarolo, n.d.).

Governments are not doing enough to enforce the laws that they write, fining a multi-trillion-dollar company around 5% of its daily profit while bankrupting small start-ups. This prevents fair competition and lets the bigger players get away with more, without a care for ethics, because they don’t need to care. Some governments are not concerned about the ethics of what they are doing, because ignoring ethics gives them more control over their people and more control globally.

International Regulation

Everyone I have spoken to believes an international board to regulate ethics would be a good idea; however, everyone also believes that in practice it wouldn’t work, because it would need government cooperation. “An example of a failing board is the Entertainment Software Rating Board (ESRB)” – Madsen -- “In the mid-10s, there was a big uproar about the widespread practice of gambling-like monetisation of video games (especially those aimed at kids) and a call for warning labels on video games. Through the ESRB, the industry successfully circumvented this demand by offering a completely impotent substitution by introducing a warning label covering all in-game purchases, blurring the line between the benign and the predatory in-game purchases.” Boards are bad at enforcing regulations and guidelines. There are not many benefits to following their rules other than possibly being able to mention it in advertising. New boards need to be managed by people in the industry; however, when a board is drawn solely from the industry, big players can make rules deliberately hard to follow in order to stop startups. “Regulation creates barriers for entry and that’s bad for competition. It’s a balancing act.” --Seddon

Competition is hard in the tech space. Big tech companies buy out their small competitors to keep market control. In the long run, this is bad for consumers and the industry, as it does not promote innovation. When Google was looking into fitness, it bought Fitbit to remove a competitor. Microsoft buys game companies so that it has fewer competitors and more market control. “One of the reasons why big companies may be calling for regulation is to pull up the drawbridge to prevent smaller disruptive competitors that could affect their business models.” Microsoft, Google and OpenAI have agreed to form a self-regulatory body to help create policies and introduce laws to keep AI safe (Fortune, n.d.; Milmo, 2023). This creates the danger that Big Tech can force through anti-competitive laws, securing its place and preventing competition. These laws are unlikely to have any real effect on Big Tech itself, which has legal teams to find loopholes that startups can’t afford.

“The USA in particular already has a huge IT industry and is generally politically hesitant or opposed to introducing additional government regulation, so USA could easily be such a regulation safe haven.” –Madsen. Companies can move headquarters to avoid fines or regulation they don’t like. Amazon auctioned the location of its headquarters to whichever state would give it the greatest tax reduction. Russia has allowed bulletproof hosting (hosting services located in parts of the world where most authorities cannot enforce take-down requests). This is how ethically grey companies stay running. Shell companies have long been used to avoid taxes in a country. They can also be used to make transactions and avoid the sanctions a country uses for regulation.

“There are plenty of organisations that do not practice what they preach.” - Evie Dineva. Apple is very vocal about its stance on privacy and safety. Apple publicly said it would never hand personal data over to a government body when the FBI asked for back doors. However, Apple was willing to store all data on Chinese users in Chinese government-owned data centres. Apple is also willing to agree to data requests from the Chinese government to help with investigations. Apple does not use the same levels of encryption in China, because Chinese regulations allow Chinese authorities to access data (Nicas, Zhong and Wakabayashi, 2021).

Why don’t developers do something?

“I don't think ethics are ignored by the developers themselves, but as a cog in the machine, their hands are tied by their manager and by extension the company goals.” –Madsen. Developers have spoken out before about what they built, from an ethical and mental health standpoint, but it is down to the company to decide what to do. This is one of the reasons most big tech companies have an average employee tenure of around two years. While there may be a shortage of developers, Big Tech jobs are so in demand that developers can be seen as disposable (Magnet, 2019). This leads to developers not speaking out about company wrongdoing. The USA has whistle-blower protections, but a bad reference still makes it hard to get a new programming job, so developers stay quiet. However, there is a movement to change this in the UK. “The British Computing Society, which is a smaller professional organization for UK computer scientists, also has a code of ethical conduct https://www.bcs.org/membership-and-registrations/become-a-member/bcs-code-of-conduct/ and won't endorse UK computer science degrees unless they include content on legal, social and ethical issues.”- Miranda Mowbray. While this cannot change how a company is run, it puts ethics and legality in the minds of new developers. As new developers work and get promoted, they will hopefully bring those ethics into their companies.

Ethics is taught across schools and universities. Developers know the effects of what they are doing, but speaking about the effects of their work will in many cases stop them from getting promotions, cost them their jobs or make it harder to find new ones. Developers can be replaced, and they are aware of this. In many cases, it makes more sense to turn a blind eye to ethics to protect yourself.

Conclusion

Overall, it is clear that computer science has many ethical and legal issues that stem from the goal of gaining more money or control without any concern for how this affects end users. Big Tech companies knowingly sell and promote applications that harm people's mental health and create addiction for profit. Governments want more control over, and data on, their people, either by passing laws that force companies to share users' messages, locations and profiles or by investing in AI systems to predict people's movements and, in some cases, automated weapons. In many cases, the people whose job it is to regulate have direct conflicts of interest. When companies are allowed to regulate themselves and those providing oversight are allowed to invest directly in these companies, enforcing the rules becomes harmful to both parties.

The people I interviewed universally agree that more legislation is needed and that it needs to be enforced fairly across all companies. At the moment, large companies are allowed to operate in a grey area, knowing that they will not be prosecuted for breaking the law, while small companies regularly have to close due to prosecution. There was also a universal view that new legislation is unlikely ever to be implemented effectively, and that instead it is down to consumers to make a difference by voting with their wallets and ceasing to fund the companies that harm them.

What’s Next

I think this EPQ clearly shows that people in this industry believe there are issues. For further investigation, I would interview more people in management roles and at big tech companies. To do this, I would probably have to turn up at their offices, as I did not get any replies to my emails; however, I did not have enough time for this. I would also have liked to speak to people who regulate the tech industry, such as MPs, and to regulatory boards such as the BCS.

Bibliography

Confessore, N. (2018). Cambridge Analytica and Facebook: The Scandal and the Fallout So Far. The New York Times. [online] 4 Apr. Available at: https://www.nytimes.com/2018/04/04/us/politics/cambridge-analytica-scandal-fallout.html [Accessed 15 Oct. 2023].

Graham-Harrison, E. and Cadwalladr, C. (2018). Revealed: 50 million Facebook profiles harvested for Cambridge Analytica in major data breach. [online] The Guardian. Available at: https://www.theguardian.com/news/2018/mar/17/cambridge-analytica-facebook-influence-us-election [Accessed 15 Oct. 2023].

Hern, A. (2018). Cambridge Analytica: how did it turn clicks into votes? [online] the Guardian. Available at: https://www.theguardian.com/news/2018/may/06/cambridge-analytica-how-turn-clicks-into-votes-christopher-wylie [Accessed 15 Oct. 2023].

Schleffer, G. and Miller, B. (2021). The Political Effects of Social Media Platforms on Different Regime Types. [online] Texas National Security Review. Available at: https://tnsr.org/2021/07/the-political-effects-of-social-media-platforms-on-different-regime-types/ [Accessed 8 Oct. 2023].

Wike, R., Silver, L., Fetterolf, J., Huang, C., Austin, S., Clancy, L. and Gubbala, S. (2022). Social Media Seen as Mostly Good for Democracy across Many Nations, but U.S. Is a Major Outlier. [online] Pew Research Center’s Global Attitudes Project. Available at: https://www.pewresearch.org/global/2022/12/06/social-media-seen-as-mostly-good-for-democracy-across-many-nations-but-u-s-is-a-major-outlier/ [Accessed 8 Oct. 2023].

Gupta, M. and Sharma, A. (2021). Fear of Missing out: a Brief Overview of origin, Theoretical Underpinnings and Relationship with Mental Health. World Journal of Clinical Cases, [online] 9(19), pp.4881–4889. doi:https://doi.org/10.12998/wjcc.v9.i19.4881.

Parlapiano, A., Playford, A., Kelly, K. and Uz, E. (2022). These 97 Members of Congress Reported Trades in Companies Influenced by Their Committees. The New York Times. [online] 13 Sep. Available at: https://www.nytimes.com/interactive/2022/09/13/us/politics/congress-members-stock-trading-list.html.

MIT Technology Review. (n.d.). A college kid created a fake, AI-generated blog. It reached #1 on Hacker News. [online] Available at: https://www.technologyreview.com/2020/08/14/1006780/ai-gpt-3-fake-blog-reached-top-of-hacker-news/.

NBC News. (n.d.). ChatGPT has thrown gasoline on fears of a U.S.-China arms race on AI. [online] Available at: https://www.nbcnews.com/tech/innovation/chatgpt-intensified-fears-us-china-ai-arms-race-rcna71804.

Hunton Andrews Kurth LLP (2023). CNIL Fines Apple 8 Million Euros Over Personalised Ads. [online] Privacy & Information Security Law Blog. Available at: https://www.huntonprivacyblog.com/2023/01/06/cnil-fines-apple-8-million-euros-over-personalized-ads/.

Pew Research Center (2022). Social Media and News Fact Sheet. [online] Pew Research Center’s Journalism Project. Available at: https://www.pewresearch.org/journalism/fact-sheet/social-media-and-news-fact-sheet/.

Schiff, A. (2023). Meta And Apple Face Fines And Privacy Headwinds In Europe. [online] AdExchanger. Available at: https://www.adexchanger.com/privacy/meta-and-apple-face-fines-and-privacy-headwinds-in-europe/ [Accessed 10 Sep. 2023].

Wikipedia Contributors (2019). Cambridge Analytica. [online] Wikipedia. Available at: https://en.wikipedia.org/wiki/Cambridge_Analytica.

Pegoraro, R. (n.d.). The Real Problem Wasn’t Cambridge Analytica, But The Data Brokers That Outlived It. [online] Forbes. Available at: https://www.forbes.com/sites/robpegoraro/2020/10/08/the-real-problem-wasnt-cambridge-analytica-but-the-data-brokers-that-outlived-it/?sh=3b77c7ba26a4 [Accessed 10 Oct. 2023].

Barthel, M., Mitchell, A. and Holcomb, J. (2016). Many Americans Believe Fake News Is Sowing Confusion. [online] Pew Research Center’s Journalism Project. Available at: https://www.pewresearch.org/journalism/2016/12/15/many-americans-believe-fake-news-is-sowing-confusion/ [Accessed 10 Oct. 2023].

Jurkowitz, M. and Mitchell, A. (n.d.). Americans who relied most on Trump for COVID-19 news among least likely to be vaccinated. [online] Pew Research Center. Available at: https://www.pewresearch.org/short-reads/2021/09/23/americans-who-relied-most-on-trump-for-covid-19-news-among-least-likely-to-be-vaccinated/.

Fortune. (n.d.). Microsoft, Google, and OpenAI just became charter members of what may be the first true AI lobby. Up next: Lawmakers write the rules. [online] Available at: https://fortune.com/2023/07/26/microsoft-google-openai-anthropic-lobby-frontier-model-forum-regulation/ [Accessed 16 Oct. 2023].

Selbst, A.D. and Powles, J. (2017). Meaningful information and the right to explanation. International Data Privacy Law, [online] 7(4), pp.233–242. doi:https://doi.org/10.1093/idpl/ipx022.

Milmo, D. (2023). Google, Microsoft, OpenAI and startup form body to regulate AI development. The Guardian. [online] 26 Jul. Available at: https://www.theguardian.com/technology/2023/jul/26/google-microsoft-openai-anthropic-ai-frontier-model-forum [Accessed 16 Oct. 2023].

Nicas, J., Zhong, R. and Wakabayashi, D. (2021). Censorship, Surveillance and Profits: A Hard Bargain for Apple in China. The New York Times. [online] 17 May. Available at: https://www.nytimes.com/2021/05/17/technology/apple-china-censorship-data.html. [Accessed 16 Oct. 2023]

Magnet, P. (2019). Competent Developers Are Underpaid — Here is How to Fix It. Medium. [online] 26 Sep. Available at: https://tipsnguts.medium.com/competent-developers-are-underpaid-here-is-how-to-fix-it-95dea3c19b55 [Accessed 17 Oct. 2023].

Vigliarolo, B. (n.d.). Israel sets robotic target-tracking turrets in the West Bank. [online] www.theregister.com. Available at: https://www.theregister.com/2022/11/18/israel_sets_robotic_targettracking_turrets/ [Accessed 18 Oct. 2023].

Kadam, T. (2022). Israel Deploys AI-Powered Remote-Controlled ‘Smart Shooter’ To Disperse Protesters In Palestine. [online] Latest Asian, Middle-East, EurAsian, Indian News. Available at: https://www.eurasiantimes.com/israel-deploys-ai-powered-remote-controlled-smart-shooter/ [Accessed 18 Oct. 2023].

The right to privacy in the digital age. (2022). Available at: https://www.ohchr.org/sites/default/files/documents/issues/digitalage/reportprivindigage2022/submissions/2022-09-06/CFI-RTP-UNESCO.pdf [Accessed 16 Apr. 2023].

McCabe, D. (2023). Microsoft Calls for A.I. Rules to Minimize the Technology’s Risks. The New York Times. [online] 25 May. Available at: https://www.nytimes.com/2023/05/25/technology/microsoft-ai-rules-regulation.html [Accessed 19 Sep. 2023].

Kayali, L. (2023). Apple fined €8M in French privacy case. [online] POLITICO. Available at: https://www.politico.eu/article/apple-fined-e8-million-in-privacy-case/ [Accessed Sep. 2023].

Radauskas, G. (2022). UK’s Online Safety Bill says tech bosses could face jail time. [online] cybernews. Available at: https://cybernews.com/news/uk-online-safety-bill-says-tech-bosses-could-be-jailed/ [Accessed 18 Apr. 2023].

McCallum, S. and Vallance, C. (2023). WhatsApp and other messaging apps oppose ‘surveillance’. BBC News. [online] 18 Apr. Available at: https://www.bbc.co.uk/news/technology-65301510 [Accessed 10 Apr. 2023].