My (highly caffeinated) journey to unlock the hidden knowledge of AI.

Posted on 2024-12-24 17:28:00

Welcome to my (highly caffeinated) journey to unlock the hidden knowledge of AI.

I have no idea how AI or ML works, but I have been invited to join an AI research paper, so I'm here to document my learning journey. I started off reading the Blitz PyTorch course, mostly as I had been told it was the fastest way to get from zero knowledge to a working model. Still a long way off publishing a paper, but it's a start.

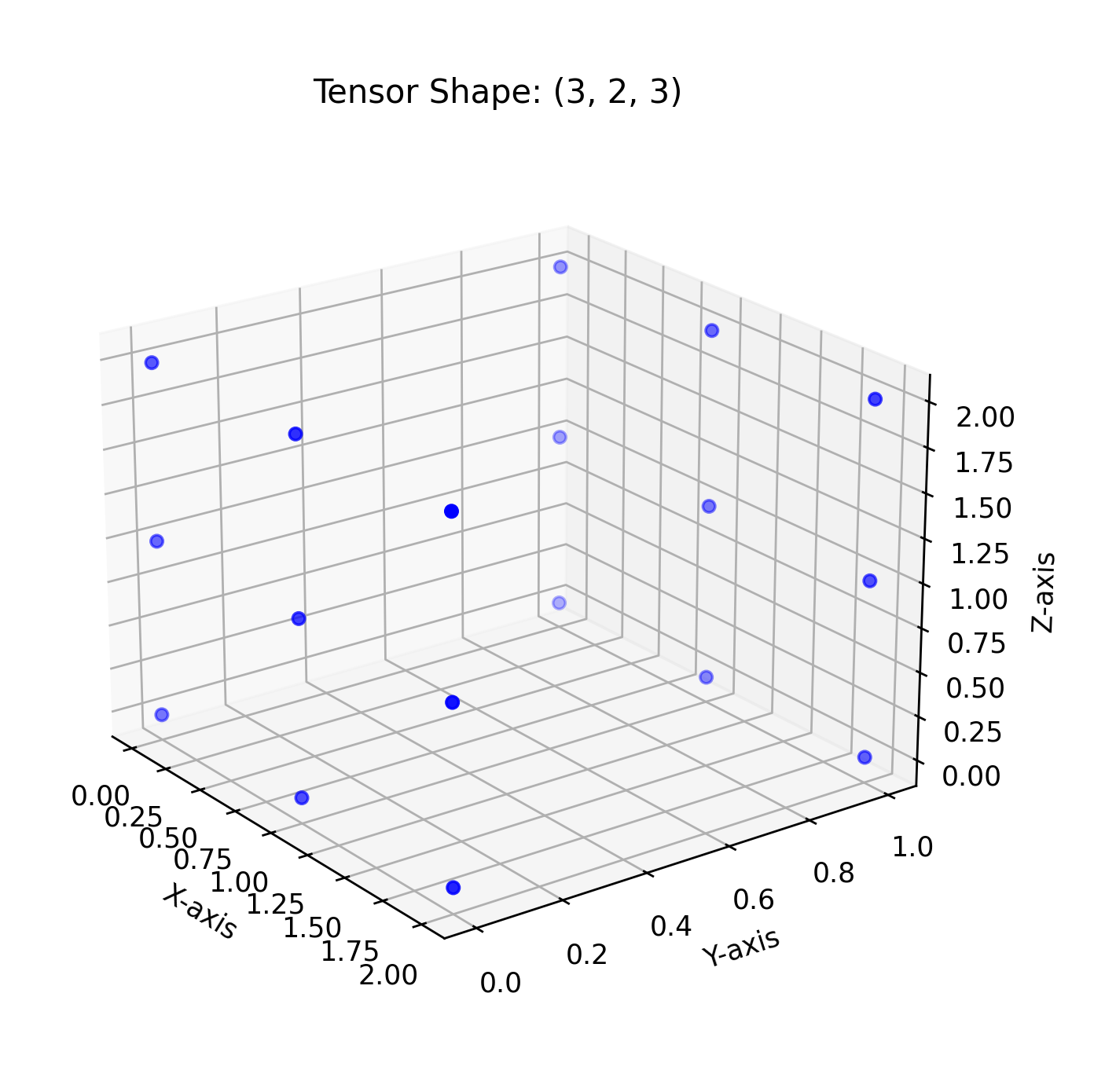

It was going great until the 13th word: "Matrices". After a small side track (thank you, The Organic Chemistry Tutor) and a tea break, we now understand what a matrix is. Time to go back to PyTorch. It turns out that while tensors are a simple concept, it's hard to get my head around. There was something that was not clicking. I put some code together to help me visualise the 3D tensor. So, a tensor with the shape (4, 2, 3).

So, a tensor is a way of storing numbers. A 0D tensor is a single number, like saying x = 9. If you are doing physics or mathematics, it would store a scalar value.

A 1D tensor is similar to an array of numbers (or a list in Python). In maths and physics, it would be a vector.

x = [2, 4, 5, 6]

A 2D tensor (a matrix) is a two-dimensional array of numbers in rows and columns.

x = [[1, 2, 3, 4],

[5, 6, 7, 8],

[9, 10, 11, 12]]

A 3D tensor can be pictured like matrices stacked on top of matrices. The image below is something I made using Matplotlib.

Of course, higher-dimensional tensors exist, but they are hard to picture. I currently don't have a way to draw in the 4th and 5th dimensions. Luckily, computers can just look at the numbers, so we do not need to visualise them.

Tensors seem like they can do a lot of things. They feel powerful, and I love how they can bridge over to NumPy.

Next up on my highly caffeinated journey: autograd. Autograd requires two steps: Forward Propagation and Backward Propagation.

So, Forward Propagation is exactly what you think it is—as long as you think about the way the input data is passed through the multiple layers of a neural network. At each neuron, it makes an educated guess on the output.

Backward Propagation is a bit more complicated. We adjust the parameters used to make the estimates based on the errors in its guess. It does this by traversing backwards through the graph, calculating the gradient, and then changing the parameters to reduce the gradient.

So, autograd keeps track of all the calculations done on a piece of data and creates a list to store the order of each operation. This list is built up in Forward Propagation. Once the list is built, we use Backward Propagation and calculate the gradient at each stage. At each stage, we can then change parameters to lower the gradient and make our model more accurate. Autograd also allows for dynamic computation, meaning we can change the layout and the parameters depending on the input.

The fun stuff. A feed-forward network is a form of multi-layer neural network that takes in inputs, passes them through multiple layers of neurons, and produces an output. It's important to note that there are no loops; all the data is always passed forward. Feed-forward networks always have one input layer, at least one hidden layer, and then one output layer.

To be honest, I have gotten a bit bored of reading and not coding, so let's try to make an OCR model (Optical Character Recognition model) that I can then link up to OpenCV to read characters from my webcam.

So, to start off, we create a class for our dataset. Basically, this is just a fancy way to load an image for our tensor, but we also pass in our transformers (I shall mention this in a minute) as well as a flag called is_training (or at least this is the way I'm doing it). If this flag is true, then we also pass in the label with the image. In addition to deciding what data to pass in (e.g., label and/or no label), we resize all the images to be the same, convert the image into an array, and then finally apply the transformers. Transformers are basically filters applied to an image that will help your model recognise them in more situations, such as adding some small random rotation, changing hue and brightness, as well as greyscaling. While these are not mandatory, they will help reduce the amount of data you need to train your model.

Step two involves loading our data using the class. When we load the data, we specify a batch_size, which is the number of samples (pieces of data) passed through the model (as inputs to the internal neurons) during each epoch. An epoch represents one complete pass through the training dataset, and within it, the model processes the data in smaller groups or "batches". A higher batch size can speed up the learning process, but it may also lead to decreased accuracy in the model.

Epochs are the number of times we loop through the data, and while there is no upper limit, you can do too many loops. Overfitting is when the model does not just learn the patterns but also learns all of the background noise. This means the model can only identify the data it was trained on. There is also a point where the results improve so marginally that there is no point in expending more resources.

In each epoch, we do backtracking and optimisation. Optimisation is the process of trying to reduce the gradient. I have come across two types of optimisation: SGD (Stochastic Gradient Descent) and Adam. SGD updates the model parameters using a fixed learning rate for all parameters, which can be simple but sometimes slow to converge (the point at which a model's performance stabilises, with the loss function reaching a minimal and constant value). There is no point training beyond this. Adam (Adaptive Moment Estimation), on the other hand, adapts the learning rate for each parameter individually, often leading to faster convergence and better performance on complex problems.

So, now that we have our training model, we let it run for a few hours and listen to our laptop cry. (I find this is a good opportunity to go to the gym, pub, or both—trust me, with my laptop, there's time.) Due to this break, it's time for a quick message from our sponsor: ME. There is a small "buy me a coffee" button in the bottom right. I'd greatly appreciate it if you took a look.

Now, time for the most important final step: saving the model. This one line can save your sanity: torch.save(model.state_dict(), 'model.pth').

I may or may not have fully trained my model and then realised I did not have the SINGLE line of code to save my model, leading to a small cry and me having to spend the next FOUR HOURS retraining the model so I could save it.

Finally, using the model. Well, this is straightforward.

Step 1. Load in the model we made earlier.

Step 2. Load in the saved weights that we just trained.

Step 3. Take a photo from OpenCV and pass it through a fun bit of code to make it greyscale.

Step 4. Shove it into the fancy black box AI we made and wait for it to spit out an output.

Step 5. Celebrate that it works.

So, that's a very high-level overview of an OCR model. If you're interested, here is the code. If you have any questions, head over to our Discord.

Next, I need to make an LSTM (Long Short-Term Memory) and an ARIMA (Autoregressive Integrated Moving Average) for my research project. However, here feels like a natural break, so let's make it a two-parter. If you'd like me to make some YouTube videos on this, let me know, and as always, thank you for reading.

If you enjoyed the blog, feel free to join the Discord or Reddit to get updates and talk about the articles. Or follow the RSS feed.